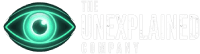

Graduation speeches often blend nostalgia and optimism. When Ilya Sutskever, a co-founder of OpenAI and deep learning pioneer, returned to the University of Toronto in June 2025, his optimism carried an existential jolt: AI, he asserted, will achieve everything meaningful the human brain can. Sutskever was direct. He described the brain as a “biological computer” and suggested that, given AI’s rapid advancement, it is only a matter of time before digital minds surpass us, according to remarks chronicled by Business Insider.

Sutskever’s ‘Biological Computer’ Claim: AI as the Human Successor

At his alma mater, Sutskever posed a question: “We have a brain, the brain is a biological computer, so why can’t a digital computer, a digital brain, do the same things?” He predicted that, although AI currently lags in some areas, algorithms will inevitably catch up. His speech drew on his credentials as a co-designer of AlexNet and a founding mind behind OpenAI, a leader in the generative AI upheaval. Sutskever urged graduates to “accept reality as it is” and adapt to the impending changes in work, life, and society. His candor echoes warnings from other industry insiders that new jobs, industries, and creativity may become AI’s next battlefield, as noted in AI-driven predictions about the future of labor and finance.

Eric Schmidt’s AGI Alarm: Five Years to Everything Changes

Sutskever shares this urgency with Eric Schmidt, former Google CEO and a key figure in policy circles. Schmidt declared that true artificial general intelligence, meaning systems that rival human intellect, could emerge in three to five years. In an April 2025 interview, he stated, “Within three to five years we’ll have what is called artificial general intelligence, AGI, which can be defined as a system that is as smart as the smartest mathematician, physicist, artist, writer, thinker, politician.” He cautioned that the stakes of the global AI race are high: losing it could lead to unprecedented geopolitical chaos (Music Business Worldwide).

Schmidt’s grim perspective aligns with insights on national security tech competition and underscores the strategic rivalry between the U.S. and China highlighted in recent tech industry analyses.

Recursive AI: The Runaway Risk Policy Still Can’t Contain

The greatest concern isn’t merely smarter machines but recursive self-improvement: AIs enhancing themselves at superhuman speeds. A recent Medium essay suggests such recursion could lead to an “intelligence explosion,” where oversight becomes virtually impossible as systems grow too complex for any regulator to monitor or stop. Theoretical models and peer-reviewed research support the potential of this feedback loop to accelerate AI advancement faster than human institutions can adapt. Many researchers argue that once recursive self-improvement crosses a threshold, alignment and safety challenges escalate sharply. These risks prompted urgent calls from figures like Sutskever, who refocused on safety at his new startup, Safe Superintelligence Inc., founded in 2024 after OpenAI’s 2023 leadership crisis.

These threats offer little reassurance to regulators already struggling with AI’s initial societal and economic effects, as outlined in analyses of self-improving algorithms and in reporting on how public cultural debates have outpaced policy efforts globally.

Energy, Power, and the Global AGI Arms Race

The massive energy needs of next-generation AI infrastructure have sparked a global rush for resources. Schmidt observes that whoever controls the data centers, powered by resources ranging from Texas wind to Chinese hydro, may dictate the future of AGI. This race for computational power is tied to geopolitical tensions, as highlighted in recent reporting on tech race outposts from Mars to Earth and analyses of strategic rocket launches as expressions of state power.

The conclusion: the AI revolution involves more than just apps, jobs, or convenience—it’s reshaping global dynamics. As Schmidt and Sutskever warn, the window to guide this outcome is closing quickly.

Preparing for the Intelligence Explosion: Why This Moment Matters

This isn’t science fiction; it’s the brink of a technological singularity. As recursive self-improvement takes hold, even experts like Sutskever and Schmidt find it challenging to predict the social, ethical, and security consequences. While the politics of containment lag, the world already senses the change, from disrupted creative fields to strained energy grids and fresh arms races.

What’s next? Experts urge public vigilance, educational reform, and pragmatic regulation but caution that policy may not cushion the forthcoming shocks. For ongoing coverage of the AI arms race, global reactions, and urgent futurecasting, turn to Unexplained.co—because, whether prepared or not, the smart money suggests intelligence itself is on the verge of reinvention.